Game of Life

As another episode on my C adventures, I've decided to implement Conway's Game of Life in C, using raylib, just like I had done with my bouncy balls a few posts earlier.

Once again, the source code is available on my GitHub, so you can check it out if you'd like. If you're running Linux, just make sure to have the raylib library installed, and run make run to compile and run.

If you're on another platform, idk. Good luck.

(In all seriousness: I'll look into making this and bouncy-ball buildable on Windows if anyone is actually interested, I just don't have the means or the patience for that right now. If you do, you can always send in a pull request ;D)

Implementation details

I am going crazy over raylib right now I'm not gonna lie. It's so easy to use compared with the SDL I was using before for these C experiments, everything is simple. Life is good.

This uses two RenderTexture2Ds that are interchanged each frame, the previous frame is used as input for the next one, and my fragment shader, gol.glsl, takes that input, a visual representation of the grid, and spits out the new, updated one. The bonus of using a shader is that it runs on the GPU, which is very speedy for this kind of math.

The simulation itself is nothing special, it's just Conway's Game of Life... But I have a confession to make.

Deadly mistake

I implemented the rules for the game reading from the Wikipedia article, the following is all I had to go off of for guiding me on my implementation:

At each step in time, the following transitions occur:

- Any live cell with fewer than two live neighbours dies, as if by underpopulation.

- Any live cell with two or three live neighbours lives on to the next generation.

- Any live cell with more than three live neighbours dies, as if by overpopulation.

- Any dead cell with exactly three live neighbours becomes a live cell, as if by reproduction.

These rules, which compare the behaviour of the automaton to real life, can be condensed into the following:

- Any live cell with two or three live neighbours survives.

- Any dead cell with three live neighbours becomes a live cell.

- All other live cells die in the next generation. Similarly, all other dead cells stay dead.

And I made a mistake.

I watched the simulation go wrong so many times, what could it be, what could it be?! Here are the things I checked before I found out where my mistake was:

- Maybe it could be an off-by-one error on the for loops?

- Or maybe I misplaced an

iandjon the for loops... - Maybe the math I did to get the pixel size is wrong

- Maybe the Wikipedia article is wrong.

- ...or maybe I made a mistake implementing the rules

- Maybe it's not getting neighbors because of floating point math weirdness

- Maybe the floats don't have enough precision...

- Maybe I shouldn't be using ints?

I tried fixing all these "problems" (except the Wikipedia one that was just me reaching my wit's end,) but the result was the same, and then...

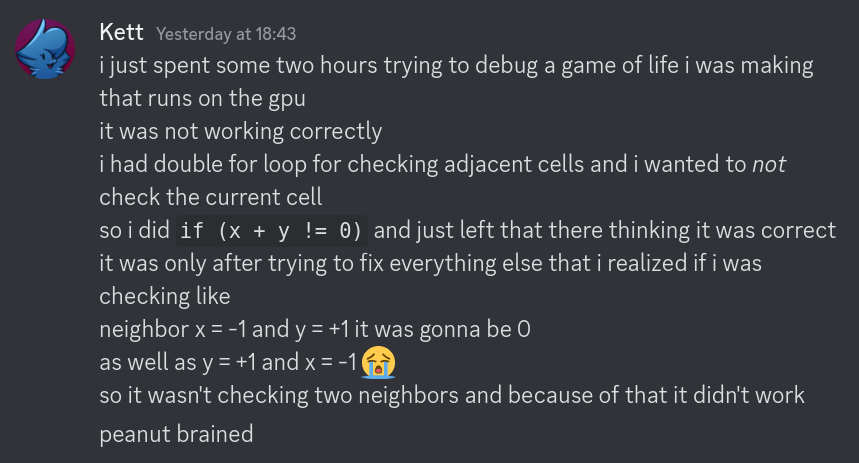

Take a look at line 24 on gol.glsl. And then take a look at what it looked like before.

if (i + j != 0) {

if (sample_position(fragTexCoord + (vec2(i, -j) * pixelsize)) == 1.0) {

count++;

}

I had failed to consider the positions (-1, 1) and (1, -1) in the neighbor check, and that turned a one-hour project into a four-hour one, because that was the last place I went to look for a mistake.

That was pretty embarrassing.

But still,

...

I'm pretty happy with the end result! It runs very snappily, no matter how high I make the resolution, it really impresses me how much parallel math a GPU can evaluate, a 4800x2700 simulation running at 60 frames per second was only using 20% of my Radeon RX 6600's capacity.

Writing things on a lower level like this really makes you appreciate just how powerful modern hardware really is, even if it's not top-of-the-line. I think we've gotten too comfortable with just how much power we have, that we've been wasting it.

I usually advocate for staying comfy, but I think we could do better, y'know? I think we could squeeze more performance out of our math machines.